#you need input... To create output.... there is no input

Text

i have had absolutely zero inspiration for new art or fics which surely has nothing to do with the fact i am squandering each and every day of my summer so far

8 notes

Text

The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI--and it's not their fault. Companies love to slap "AI" on anything they believe can pass for something "intelligent" a computer program is doing. And this muddies the waters when people want to talk about AI, because the exact same word covers a wide umbrella and they themselves don't know how to qualify the distinctions within.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural, and rigidly defined. I could "program" traffic light behavior that essentially goes { if(light === green) { go(); } else { stop();} }. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things will still need to be spelled out.)
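Written out as something you could actually run, the rigid version might look like the sketch below. The names `Light`, `Road`, and `decide` are invented for illustration, and the pedestrian check is just one stand-in for the "lot more to check for" a better program would need.

```typescript
// Hypothetical types for illustration; not taken from any real traffic system.
type Light = "green" | "yellow" | "red";
type Action = "go" | "stop";

interface Road {
  light: Light;
  pedestrianInRoad: boolean;
}

// Every condition is spelled out by hand: nothing here is learned from data.
function decide(road: Road): Action {
  if (road.pedestrianInRoad) {
    return "stop";
  }
  if (road.light === "green") {
    return "go";
  }
  return "stop";
}

console.log(decide({ light: "green", pedestrianInRoad: false })); // go
console.log(decide({ light: "green", pedestrianInRoad: true })); // stop
```

If it ever makes a wrong call, you can read the function top to bottom and point at the exact line responsible.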

An AI traffic light behavior is generated by machine learning, which, simplistically, is a huge cranking machine of linear algebra that you feed training data into and that "learns" from it. By "learning" I mean it's developing a complex and opaque model of parameters to fit the training data (but not over-fit). In this case the training data probably includes thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists will turn this crank over and over, adjusting it to create something which, in very opaque terms, has developed a model that will guess the right behavioral output for any future scenario.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.
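For contrast, here is a toy sketch of the "learned" version: a single perceptron, about the smallest thing that counts as machine learning. The four training rows are invented, and real systems train far larger models on video rather than on two yes/no features, so treat this as an illustration of the idea and not of how any actual product works.

```typescript
// Toy perceptron: learns "go only when green and no pedestrian" from examples.
// The training rows below are made up for illustration.
type Example = { features: [number, number]; go: number }; // [isGreen, pedestrianInRoad]

const trainingData: Example[] = [
  { features: [0, 0], go: 0 },
  { features: [0, 1], go: 0 },
  { features: [1, 0], go: 1 },
  { features: [1, 1], go: 0 },
];

interface Model {
  weights: [number, number];
  bias: number;
}

// Weighted sum of the inputs, thresholded at zero.
function predict(model: Model, features: [number, number]): number {
  const sum =
    model.weights[0] * features[0] + model.weights[1] * features[1] + model.bias;
  return sum > 0 ? 1 : 0;
}

// "Turning the crank": nudge the parameters whenever a guess is wrong.
function train(data: Example[], epochs: number): Model {
  const model: Model = { weights: [0, 0], bias: 0 };
  for (let epoch = 0; epoch < epochs; epoch++) {
    for (const { features, go } of data) {
      const error = go - predict(model, features);
      model.weights[0] += error * features[0];
      model.weights[1] += error * features[1];
      model.bias += error;
    }
  }
  return model;
}

const model = train(trainingData, 20);
console.log(predict(model, [1, 0])); // 1 (green, road clear: go)
console.log(predict(model, [1, 1])); // 0 (green, pedestrian: stop)
```

Notice that nobody wrote an "if green, go" rule anywhere. The behavior lives in a few numbers, and with millions of them instead of three, reading the reasoning back out gets very hard.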

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But like if that plinko machine contained millions of pegs and none of them necessarily correlated to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is, for traffic light specifically as that is intentionally very simplistic. But a model trained to recognize written numbers for example likely contains no parameters at all that you could map to ideas a human has like "look for a rigid line in the number". The parameters may be all, to humans, meaningless.)

So, that's basics. Here are some categories of things which get called AI:

1. "AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion".
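That pixel-change rule can be sketched in a few lines. Everything here is an assumption for illustration: frames are flat arrays of grayscale values (0 to 255), `detectMotion` is a made-up name, and both thresholds are arbitrary.

```typescript
// Plain frame differencing: no training data, no model, just a comparison.
// Frames are flat arrays of grayscale pixel values; thresholds are arbitrary.
function detectMotion(
  previous: number[],
  current: number[],
  pixelThreshold = 30,
  minChangedPixels = 3,
): boolean {
  if (previous.length !== current.length) {
    throw new Error("Frames must have the same number of pixels");
  }
  let changed = 0;
  for (let i = 0; i < current.length; i++) {
    // Count pixels whose brightness moved by more than the threshold.
    if (Math.abs(current[i] - previous[i]) > pixelThreshold) {
      changed++;
    }
  }
  return changed >= minChangedPixels;
}

const emptyRoom = [10, 10, 10, 10, 10, 10];
const someoneWalksIn = [10, 200, 210, 190, 10, 10];
console.log(detectMotion(emptyRoom, emptyRoom)); // false
console.log(detectMotion(emptyRoom, someoneWalksIn)); // true
```

It can look smart from the outside, but it is the same kind of rigid, hand-written check as the traffic light example.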

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI technology that looks at cells and determines whether they're cancer or not, that is using this technology. OCR (Optical Character Recognition) is the technology that can take an image of hand-written text and transcribe it. Again, it's using linear algebra, so yes it's AI.

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes fly in the air" technology but their impact is very different.

3. The AI we ARE talking about. "ChatGPT" type of generative AI which uses LLMs ("Large Language Models")

If there was one word I wish people would know in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that ChatGPT is fueled by. LLMs are so extremely powerfully trained on human language that they can take an input of conversational language and create a predictive output that is human coherent. (I am less certain what additional technology fuels art-creation, specifically. Image generators such as Stable Diffusion are diffusion models rather than LLMs, though they rely on a text encoder trained on language to interpret the prompt, so the AI art generation that has risen hand-in-hand with powerful LLMs is a close relative and not the same thing.)

This technology isn't exactly brand new (predictive text has been using it, though more like the mostly innocent and much less successful older sibling of some celebrity, who no one really thinks about). But the scale and power of LLM-based AI technology is what is new with ChatGPT.

This is the generative AI, and even better, the large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

3K notes

Text

wonyoung rules to be that girl ✧

Protect your energy : ur energy is precious, and you deserve to feel light and at peace. If someone consistently brings stress or heaviness into your life, it's okay to create distance (idk why ppl think it's a bad thing) . When people make you doubt yourself or fill you with worry especially when you're ready to pursue something meaningful trust your instincts and step back. honor your wellbeing. You have permission to protect your peace always at any time

Create sacred rhythms : there's something beautiful about starting and ending your day with intention. Consider creating gentle morning and evening routines that feel nourishing rather than demanding. Perhaps it's journaling with your coffee, setting a quiet intention for the day, or simply sitting with yourself for a few moments without thinking "oh no, this day will go bad". When you give structure to your mornings and evenings, your days naturally flow with more purpose and less chaos.

Embrace balance : Life isn't meant to be lived at full intensity all the time. You don't need to be productive every moment to be worthy or successful. Think of yourself like water: sometimes flowing rapidly, sometimes still and reflective. Rest isn't the opposite of productivity; it's what makes sustained growth possible. Even Wonyoung has an idol-off mode. Allow yourself to have hobbies, to laugh with friends, to do absolutely nothing sometimes. Your worth isn't measured by your output, and taking breaks doesn't make you lazy; it makes you a balanced person.

Cultivate gratitude : Gratitude is like gentle sunlight for the soul. Instead of focusing on what's missing from your life, try turning your attention to what's already here. The simple fact that you woke up this morning, that you can learn and grow, that there are people who care about you: this is everything. When you notice the abundance that already exists in your life, you'll find that even ordinary moments begin to shimmer with meaning.

Hold criticism lightly : Not every opinion about you deserves a home in your heart. Some feedback comes from love and wisdom like when someone who truly cares for you offers gentle guidance. This kind of input is worth considering. But criticism rooted in judgment or negativity let it pass through you like wind through trees. You can listen without absorbing, consider without accepting. Trust your inner wisdom to know the difference between feedback that serves your growth and words that only serve to diminish you.

Choose kindness : Kindness is one of the most powerful forces in the world, and it costs nothing to give. When you approach others with genuine warmth and openness, you create ripples of goodness that extend far beyond what you can see. You don't need to reserve your kindness only for certain people everyone you meet is fighting battles you know nothing about. A gentle word, a helping hand, or simply treating someone with dignity can transform their entire day. In a world that sometimes feels harsh your kindness becomes a light that others will remember long after the moment has passed.

This is your life : not everything you do needs to make sense to other people. If it makes you happy and it doesn’t hurt you or anyone else, then it’s enough. You don’t have to explain why you like taking mirror selfies, or dressing up for no reason, or... or... You don’t have to justify your routines, your joys, your weird little habits. The world will always have opinions; that’s just how it is. But you’re not here to please them, you’re here to live ur own life. So if something makes you feel calm, or safe, or more like you, keep it. Ur life doesn’t need to look like anyone else’s.

@bloomzone ⌨️

#bloomtifully#bloomivation#bloomdiary#luckyboom#lucky vicky#wonyoungism#becoming that girl#ive wonyoung#creator of my reality#glow up#divine feminine#dream life#it girl#wonyoung#just girly things#just girly thoughts#just girly posts#girly stuff#self growth#self love#self confidence#self development#self improvement#dream girl journey#dream girl tips#girlblogger

254 notes

Text

your dream life is jealous of how much time you spend doomscrolling.

hey sweethearts!! mindy hereeeee, so i've realized something… like how we're all literally addicted to our phones?? and how our dream lives are sitting somewhere in the corner of our minds, pouting and wondering why we never hang out anymore??

i had this moment last week where i realized i'd spent THREE HOURS scrolling through videos of people organizing their fridges (which like… is satisfying but also?? what am i doing with my life). and then i had this thought that actually shook me: what if my future self could see how i'm spending my time right now? would she be proud or would she be like "girl… what are you DOING?"

the truth is that our phones are literally engineered to be more interesting than our real lives. they're designed by actual geniuses who understand our brain chemistry better than we do. it's not a fair fight!! and yet we blame ourselves for not having "enough willpower" which is honestly just mean??

✧ why we're all trapped in the doom-scroll cycle:

our phones deliver perfectly timed dopamine hits (the happy brain chemical!!) that make us feel momentarily good but leave us wanting more

the algorithm knows exactly what will keep us scrolling (it's literally studying us)

our brains are wired to seek novelty and our phones offer infinite novelty

real life has friction and requires effort; scrolling requires zero effort

we use our phones to escape uncomfortable emotions that actually need processing

the comparison trap makes us feel like we're "researching" our dream life rather than building it

i realized something that changed everything for me: the time i spend consuming other people's lives is time i'm not creating my own. and like… that's the whole game??

✧ how to break free (in ways that actually work):

identify your "scroll triggers" - for me it's when i feel anxious about my work, when i first wake up, and weirdly when i'm hungry?? once you know your triggers you can create little alternate pathways

create "phone-free zones" in your home - i have a little basket by my front door where my phone goes when i come home, and my bedroom is completely phone-free (i bought an actual alarm clock like it's 2005 and honestly?? life-changing)

practice the "dopamine pause" - when you feel the urge to reach for your phone, pause for 60 seconds. just sit with the discomfort. often the urge will pass, and if it doesn't, at least you're making a conscious choice

redesign your home screen to be boring af - delete all social apps from your home screen, make everything grayscale, turn off all notifications except calls/texts from actual humans who matter

schedule specific "input" and "output" times - block 30 minutes for consumption and 90 minutes for creation. your ratio should always favor creation over consumption

try "analog hour" before bed - read physical books, write with pen and paper, stare at the ceiling and let your mind wander (this is where all my best ideas come from tbh)

use the "future self" visualization - whenever you're about to fall into a scroll hole, close your eyes and visualize your future self. what would she want you to do with this precious hour of your life?

create ✧ focus-core ✧ routines - these are deeply satisfying rituals that give your brain the same dopamine hit as scrolling but actually build toward your dreams (for me it's making fancy coffee while listening to a specific playlist, then writing for 45 minutes)

practice "productive procrastination" - if you absolutely must avoid your main task, have a secondary important task ready (like if i don't want to write, i'll organize my study materials instead)

implement the "touch it once" rule - when you pick up your phone, have a specific purpose and do ONLY that thing, then put it down

the hardest truth i've had to accept is that there's no magic hack that makes this easy. creating a life that's more interesting than your phone requires actually building that life brick by brick, day by day. and the beginning is SO HARD because your brain is literally withdrawing from its favorite drug.

but i promise you something magical happens after about two weeks - you start to feel… different?? more present? more alive? and you realize that all along, the life you were searching for in your phone was waiting for you to look up.

your dream life is waiting for you to stop watching other people live theirs and start building your own. it's jealous of your phone, yes, but it's also patient. it knows that eventually, you'll come home to yourself.

xoxo, mindy 🤍

p.s. if you catch yourself scrolling after reading this, please don't feel bad!! just gently put your phone down, take a deep breath, and remember that you're breaking a literal addiction. be kind to yourself through the process, okay? tiny steps in the right direction are still steps. 💗

#dopaminedetox#digitalminimalism#focuscore#mindfulness#phoneaddiction#doomscrolling#productivity#selfimprovement#glowettee#coquette#socialmediabreak#intentionalliving#mindsetshift#dreamlife#screentime#digitalwellness#phonedetox#mentalhealth#healthyboundaries#focusroutine#tumblradvice#slowliving#presentmoment#phonehabit#consciousliving#girlytips#studygram#cozyadvice#girlblogger#girl interrupted

165 notes

Text

Often when I post an AI-neutral or AI-positive take on an anti-AI post I get blocked, so I wanted to make my own post to share my thoughts on "Nightshade", the new adversarial data poisoning attack that the Glaze people have come out with.

I've read the paper and here are my takeaways:

Firstly, this is not necessarily or primarily a tool for artists to "coat" their images like Glaze; in fact, Nightshade works best when applied to sort of carefully selected "archetypal" images, ideally ones that were already generated using generative AI using a prompt for the generic concept to be attacked (which is what the authors did in their paper). Also, the image has to be explicitly paired with a specific text caption optimized to have the most impact, which would make it pretty annoying for individual artists to deploy.

While the intent of Nightshade is to have maximum impact with minimal data poisoning, in order to attack a large model there would have to be many thousands of samples in the training data. Obviously if you have a webpage that you created specifically to host a massive gallery of poisoned images, that can be fairly easily blacklisted, so you'd have to have a lot of patience and resources in order to hide these enough so they proliferate into the training datasets of major models.

The main use case for this as suggested by the authors is to protect specific copyrights. The example they use is that of Disney specifically releasing a lot of poisoned images of Mickey Mouse to prevent people generating art of him. As a large company like Disney would be more likely to have the resources to seed Nightshade images at scale, this sounds like the most plausible large scale use case for me, even if web artists could crowdsource some sort of similar generic campaign.

Either way, the optimal use case of "large organization repeatedly using generative AI models to create images, then running through another resource heavy AI model to corrupt them, then hiding them on the open web, to protect specific concepts and copyrights" doesn't sound like the big win for freedom of expression that people are going to pretend it is. This is the case for a lot of discussion around AI and I wish people would stop flagwaving for corporate copyright protections, but whatever.

The panic about AI resource use in terms of power/water is mostly bunk (AI training is done once per large model, and in terms of industrial production processes, using a single airliner flight's worth of carbon output for an industrial model that can then be used indefinitely to do useful work seems like small fry in comparison to all the other nonsense that humanity wastes power on). However, given that deploying this at scale would be a huge compute sink, it's ironic to see anti-AI activists for whom that is a talking point hyping this up so much.

In terms of actual attack effectiveness; like Glaze, this once again relies on analysis of the feature space of current public models such as Stable Diffusion. This means that effectiveness is reduced on other models with differing architectures and training sets. However, also like Glaze, it looks like the overall "world feature space" that generative models fit to is generalisable enough that this attack will work across models.

That means that if this does get deployed at scale, it could definitely fuck with a lot of current systems. That said, once again, it'd likely have a bigger effect on indie and open source generation projects than the massive corporate monoliths who are probably working to secure proprietary data sets, like I believe Adobe Firefly did. I don't like how these attacks concentrate the power up.

The generalisation of the attack doesn't mean that this can't be defended against, but it does mean that you'd likely need to invest in bespoke measures; e.g. specifically training a detector on a large dataset of Nightshade poison in order to filter them out, spending more time and labour curating your input dataset, or designing radically different architectures that don't produce a comparably similar virtual feature space. I.e. the effect of this being used at scale wouldn't eliminate "AI art", but it could potentially cause a headache for people all around and limit accessibility for hobbyists (although presumably curated datasets would trickle down eventually).

All in all a bit of a dick move that will make things harder for people in general, but I suppose that's the point, and what people who want to deploy this at scale are aiming for. With public data scraping, that sort of thing is fair game, I guess.

Additionally, since making my first reply I've had a look at their website:

Used responsibly, Nightshade can help deter model trainers who disregard copyrights, opt-out lists, and do-not-scrape/robots.txt directives. It does not rely on the kindness of model trainers, but instead associates a small incremental price on each piece of data scraped and trained without authorization. Nightshade's goal is not to break models, but to increase the cost of training on unlicensed data, such that licensing images from their creators becomes a viable alternative.

Once again we see that the intended impact of Nightshade is not to eliminate generative AI but to make it infeasible for models to be created and trained by anyone without a corporate money-bag to pay licensing fees for guaranteed clean data. I generally feel that this focuses power upwards and is overall a bad move. If anything, this sort of model, where only large corporations can create and control AI tools, will do nothing to help counter the economic displacement without worker protection that is the real issue with AI systems deployment, but will exacerbate the problem of the benefits of those systems being more constrained to said large corporations.

Kinda sucks how that gets pushed through by lying to small artists about the importance of copyright law for their own small-scale works (ignoring the fact that processing derived metadata from web images is pretty damn clearly a fair use application).

1K notes

Text

I am once again reminding people that Vocaloid and other singing synthesizers are not the same as those AI voice models made from celebrities and cartoon characters and the like.

Singing synthesizers are virtual instruments. Vocaloids use audio samples of real human voices the way some other virtual instruments will sample real guitars and pianos and the like, but they still need to be "played", per se, and getting good results requires a lot of manual manipulation of these samples within a synthesis engine.

Crucially, though, the main distinction here is consent. Commercial singing synthesizers are made by contracting vocalists to use their voices to create these sample libraries. They agree to the process and are compensated for their time and labor.

Some synthesizer engines like Vocaloid and Synthesizer V do have "AI" voice libraries, meaning that part of the rendering process involves using an AI model trained on data from the voice provider singing in order to ideally result in more naturalistic synthesis, but again, this is done with consent, and still requires a lot of manual input on the part of the user. They are still virtual instruments, not voice clones that auto-generate output based on prompts.

In fact, in the DIY singing synth community, making voice libraries out of samples you don't have permission to use is generally frowned upon, and is a violation of the terms of service of most DIY engines, such as UTAU.

Please do research before jumping to conclusions about anything that remotely resembles AI generation. Also, please think through your anti-AI stance a little more than "technology bad"; think about who it hurts and when it hurts them so you can approach it from an informed, critical perspective rather than just something to be blindly angry about. You're not helping artists/vocalists/etc. if you aren't focused on combating actual theft and exploitation.

#long post#last post I'm making about this I'm tired of commenting on other posts about it lol#500#1k

2K notes

Note

Do you have any like relationship personality dynamics? Like flirty and grumpy but a bit more specific? Or general. Thanks

List of Dating Styles for Your Romance Novel

Here's a list of popular romance tropes I made! If you're looking for specific dynamics between characters, I think that post would help.

I'll focus on various dating styles your characters can have. You can have fun matching one style to another to create various relationship dynamics!

Flirty: Throwing cheesy comments without batting an eyelash, teasing, having fun watching the other turn into a tomato

Mother/Father: always nagging their partner, giving advice, putting up with their bad days with patience (not in a toxic way, please run from people who actually try to be a parent rather than a lover)

Grumpy: Someone who always complains about what their partner does/tries to make them do...but does it anyway with actual effort.

Sunshine: Generally naive, upbeat characters who make their partners smile.

Nerdy: Lost in their own thoughts, can talk about their interest for hours on end but will listen to you also, you can never be confused about what they want for their birthday.

People Pleaser: Someone who believes they need to adapt themselves to the other person to make the relationship work. They're nice, funny, comfortable to be around but once you try to unveil their true self, things will get complicated fast.

The Committed Robot: Look, they might be a little slow to pick up the subtle cues, but if you program them right, a reliable output for each input is assured... They WILL cry with you, even though they're acting with a mind full of question marks.

Independent: They don't believe in relying on their partners and prefer to be given time to sort through their problems. Sometimes, maybe a little too compulsively.

The No. 1 Fan: They will love and cheer you like a fan would love their favorite artist. Compliments and support during the hard times, trusts more in you than you do in yourself.

The Wolf: Sexual innuendo? Check. Romantic moods and open to talk about sex? Check. Respect? Check.

Hope this helps!

─── ・ 。゚☆: *.☽ .* . ───

💎If you like my blog, buy me a coffee☕ and find me on instagram!

💎Before you ask, check out my masterpost part 1 and part 2

💎For early access to my content, become a Writing Wizard

#writing#writers and poets#writeblr#let's write#helping writers#poets and writers#creative writers#writers on tumblr#creative writing#resources for writers#writing process#writing prompt#writing community#writing inspiration#writing advice#writing ideas#writer#on writing#writer stuff#writer on tumblr#writer things#writer community#writer problems#writerscommunity#writblr#writing practice#romance#romance tropes#romance novels#writers life

179 notes

Note

Do you often use random dungeon layout generators for your megadungeons? If so, how do you make those randomly generated layouts make sense as a space? I find that the eclectic nature of how the dungeon ends up looking makes it feel weird to consider the area as a real space instead of as the output to a random generator.

I use a lot of random generation when I make megadungeons, but I pretty much never use a layout generator. That's a solution to a different problem from the one that I have. Creating an assortment of rooms connected in random ways is pretty easy for me. The problem, as you note, is making the space engaging, making it make sense, making the connections logical but also interesting, etc.

But I do think random generation is a great way to juice your creativity! Getting external input that you then have to fit your ideas into often produces better results than just trying to create on a blank slate.

My most common random tools are roll tables for generating dungeon rooms and features. Worlds Without Number is the first book I reach for for most random tables, and it has some pretty solid tables for generating rooms, features in the rooms, connections, etc. I also have a bunch of tables saved from OSR blogs for generating interesting traps or dungeon features. Honestly just rolling for the number of exits a space has is one of the simplest ways to force myself to think creatively. When the dice tell me a bedroom has six exits, it means I need to re-evaluate what that bedroom is doing and I probably need to create some unusual exits.
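If you keep your tables digitally, rolling for exits is easy to sketch. The table below is invented for illustration (it is not copied from Worlds Without Number or any blog), and `rollDie` and `rollExits` are made-up names. The random source is passed in so a roll can be reproduced if you want to.

```typescript
// Made-up d6 table: entry at index (roll - 1) is the number of exits.
const exitCountTable: number[] = [1, 1, 2, 2, 3, 6];

// Roll an n-sided die. `random` is injectable so a roll can be reproduced.
function rollDie(sides: number, random: () => number = Math.random): number {
  return Math.floor(random() * sides) + 1;
}

// Roll a d6 and look the result up in the table.
function rollExits(random: () => number = Math.random): number {
  const roll = rollDie(6, random);
  return exitCountTable[roll - 1];
}

console.log(`This bedroom has ${rollExits()} exits. Why?`);
```

The lopsided last entry is deliberate: an occasional absurd result is what forces the re-evaluation described above.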

I will use geomorphs sometimes. These are basically bespoke little fragments of dungeon created to be shuffled and combined randomly. Dyson Logos has a bunch of these, and I know @imsobadatnicknames2 has a bunch as well. These are good for creating a bunch of interesting connections and clever tiny bits that are great for finding interesting uses for. I've never used these to generate a whole dungeon, but for small fragments I really like them. I also have a set of them handy when I run a sandbox campaign in case the players somehow end up in a dungeon I didn't prep for at all.

Now, if you do want to use a randomly generated layout, whether from some tool, a dice generator, geomorphs, whatever, I have some advice for making sense of it: embrace the second occupant effect.

It's very common in dungeons that the people who built the dungeon and the current occupants are not the same group. It's an orcish ruin occupied by dwarves, it's an ancient temple being used as a bandit hideout, it's a wizard's keep overrun by demons, etc. The question that a random layout is going to have you asking is, "Why is this constructed this way?" and it's perfectly okay for the answer to be, "there's nobody left who knows." What was this big room with seven entrances built for? Well, nobody knows, but the goblins living there are using it as a dining hall.

If you're designing using this approach, you don't need an answer for every space. You can instead approach it the same way its new occupants did. Take it for granted that this is the space that exists, how would the new occupants use it? That weird room off to the side that's a pain to access? Well, who knows what it was built for, but it's cold storage now. This weird thoroughfare makes a perfectly good guard checkpoint. This big hole in the floor might have been used for casting spells at some point, but now it's a garbage dump. In this way, it's easy to come up with what rooms are now that doesn't require you to answer what a room was built for.

Using this approach, you still want to have good answers for what a room's original purpose was some of the time. If the space just never makes sense, players will stop trying to engage with it logically, and that's a big loss. Plus, using this effect most effectively, you get a lot of value out of knowing the previous purpose of a room. It can be easy for every kitchen to feel similar, but a kitchen that's been built on what used to be a foundry is instantly more interesting and easier to get creative with. But you get to pick and choose the parts of a random layout that look interesting, or that you have an easy time answering for, and make those the parts where the original purpose shines through. And then in the spaces where you're left saying, "What is with this snarl of hallways?" you can just have the answer be, "it's a mystery. Scholars theorize it served a ritual purpose."

67 notes

Note

Could the gods cast a spell to transform themselves into the size/shape of a normal pony? Or create a separate body for themself that they can see and move out of while their main body still walks the land?

How would they react to the possibility of such spell?

Sort of! They can train themselves to cast an illusion of themself walking among ponies, but it's harder to use the illusion as ears and eyes, so it's more like trying to interact with a nest of ants by poking them with a needle.

While it's possible to create a whole separate body, that's some really uncontrollable magic there, and very likely to go wrong. All the power in the universe can't make a flawed spell perfect.

Twilight is the most likely to employ this sort of spell, and also the most likely to screw it up. There may or may not be some sort of evil shade version of her running around from a failed attempt!

Every spell has to be written carefully. The more complicated the spell, the more thinking and details must go into it before the casting. A safe but complex spell would be to make a miniature version of yourself, then rig it with sense-channels that transmit input (eyesight and hearing) into your own eyes and ears, and then also have outputs (speech, touch) that are linked to your own. Yet another layer of complexity would be separating your actions from the avatar's, so when your spell speaks, your god-voice doesn't ring out across the land. And you need to allow it to walk around and touch things without controlling it mech-pilot style and stomping all over the anthill with your giant god-hooves.

So while all of them could technically cast a spell, this one is extremely complicated to create and upkeep. And don't get me started on trying to cloak your god-body to hide it from view! It's more likely to end up scaring whoever you're trying to talk to.

#ask#shire draws mlp#princess luna#twilight sparkle#ssg gods#ssg alicorns#skyscraper gods#ssg twilight#ssg twilight sparkle#ssg luna#skyscaper gods lore#art#shire draws

301 notes


Text

as a general maxim, when you make art you wanna create contrasts: detail vs simplicity, saturated colours vs grey, organic shapes vs inorganic, long paragraphs vs short, colloquial language vs formal, loud vs quiet, fullness vs emptiness

and you also want to work that on a meta level as well. contrasting between things being the same and things being different. repeating the same thing over and over feels homogeneous but so does everything constantly changing. a mix of some things coming back around and some things being novel keeps you guessing.

and 'keeps you guessing' is an important thing - a purely regular pattern with no variation doesn't tend to elicit as much interest, except when it's placed in contrast to other artworks.

in the last few decades it seems that, probably inspired by the parallel discourses of machine learning, neuroscientists have cooked up a kind of 'thermodynamics-style' theory of how the brain works with what they call the 'free energy principle', which casts the brain as being in a cycle of constantly generating predictions and testing them against sensory input in order to refine its internal model. here's artem kirsanov, a guy who makes pretty good videos on ML theory, with a neat visual summary:

youtube

why do they call it free energy? I guess they're following in the footsteps of Claude Shannon in borrowing names from thermodynamics for similar-looking formulae. (and in fact, Shannon entropy ends up playing a role here.)

I will need to dig more into this to really pierce the mathematical formalism and jargon in this hypothesis; presently I'm reacting to a surface summary and vibes. but musing on the idea, I think this tells quite a cute story about the above maxim for art: things that are hard to predict force us to do more work to develop our internal model, so they provoke us; but there does need to be something to predict, because it's also satisfying to resolve a pattern in the noise.

the interplay of surprise, recognition, and learning, by this theory, somehow drives how we feel about the art, from the feeling of intrigue when you see an intricate visual composition, to the emotional impact that comes from a long-teased resolution in music.

to add a wrinkle to this, apparently individual neurons respond positively to rhythmic stimulation, such that if you're trying to grow a physical neural net, that's how you 'reward' neurons for giving good outputs. (I believe it came up in this video where a youtuber tried to grow some neurons to play Doom.) repeated patterns are in a sense the 'least surprising' input. not 100% sure how that vibes with this theory tbh, I feel like there's a lot of ways you could fit those two concepts together.

music is a very 'pure' form of art in this sense: you establish clearly recognisable patterns and then vary them just before they start to get boring.

games, on the other hand, engage our direct interactive feedback: we can try things and see if the game responds in the way we expect, and in so doing, elaborate an internal model of the game world.

I think this also gives me a lens on my autistic difficulties with social interaction: until I learn enough of the social 'game' in a particular space to understand how the things I say are likely to be received, I tend to feel quite anxious. but once I start to get to know people and get a basic model and feel that I'm not likely to put my foot in it too badly, the feeling flips quite dramatically; it becomes exciting to meet people and learn about all the very specific things they're passionate about.

I don't wanna go too far with this, I distrust 'everything reduces to this simple formula' metaphors, but I formed a connection and now I'm telling you about it!!!

#introspective nightposting#philosophy#ai#not quite sure which of my existing boxes to put this subject in so it gets all three#neuroscience#Youtube

29 notes


Text

When writing feels stale and unfamiliar...

Ever been trying to finish a WIP, update a fanfic, or push out something for school, but writing just suddenly feels so stale, almost like your own writing doesn't sound like you...

Well I'm here to help you break that cycle!

This feeling usually shows up for one of two reasons

Imposter Syndrome

or

You're Simply Improving

That's right, it's not you doing something wrong, you're just being a skilled writer. How do we get out of this dull feeling though? There's a lot of different tactics you can try. Here's some potential options.

Read your own writing

Read your own writing. Do it! Not only does it help you realize how much you like your own writing, it forces you to refresh. It's a great way to remember where you were going in a piece, and any potential themes or writing style you accidentally dropped. It makes it easier to catch mistakes you missed too, and discover some places to improve.

(this one works twice as well after a break or some passage of time :D)

Change of Atmosphere

Not exactly a change of location, but instead a change of atmosphere. Play a new lo-fi playlist, try writing without any sound at all, indulge in thematic video essays in the background. Sometimes in order to regain focus the atmosphere needs to change.

Consume New Content

This goes for all creatives who are stumped. To constantly output creative work and ideas you need an input in the first place. For some people it may take a while before they feel the brunt of creative block, but it will eventually come for all. The best advice I can give is to consume new media. Read a new book, watch a TV show, play a video game, find a new artist you like. This is all input. Input becomes output.

Take A Break

Not everyone has the privilege to do this one, but if you do, don't turn your nose up at it. Taking a break from creative work isn't something everyone does, especially those whose skills get rusty quickly. I've been there.

Taking a break from something is a great way to make it feel fresh when you return. Breaks don't have to be long. Whatever counts as a break for you is what you should strive for (even if it's only a day or two).

Acceptance

Accept that you are not going to create top-notch, polished stuff 24/7. Every artist and writer creates and dumps tons of unfinished art, erases pieces, and makes lots of mistakes. Oftentimes the best work that we admire takes a lot of time, refinement, practice, and drafts. Sometimes we just have to write badly in order to come back and do it again well.

65 notes


Text

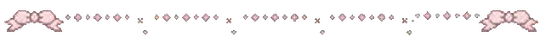

automatic shader node configuration

LOOK AT MY BEAUTIFUL NODE TREES 😭 I wrote a thing and now my shader trees are so tidy!!!

It takes a little work to get models exported from TS4SimRipper to play nice in Blender. The materials don't like to import nicely, and cause issues that at first I handled manually.

Specular texture node is loaded but not attached to the Principled BSDF shader

Normal map does not import at all and requires some fussing to get working - the normal map texture is half the size of the diffuse/specular textures

Glass layer base textures have color but not alpha outputs

Emission maps are not attached (and do not seem to export correctly - I always just get an empty file)

I'm not sure if I'm using SimRipper wrong? But in any case, I found solutions to the first three problems and wrote a script to do those things for me!

The Specular IOR Level node that is created automatically when importing a sims .dae file needs to have its alpha output connected to the shader's Specular IOR Level input

You need a normal map node connected to the shader's normal input, a vector mapping node attached to the normal map's color input with the scale set to (2,2,2) to account for the smaller size of the normal map textures, and finally create an image texture node for the normal map - attach the color output to the mapping node's vector input

For glass layers, the alpha output of the base color node needs to connect to the shader's alpha input - this is what makes glass layers transparent
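The three fixes above could be wired up roughly like this. This is only a sketch, not the script from the post: the function name `wire_sim_material` and its parameters are made up for illustration, and it assumes you have already looked up the material's node tree, its Principled BSDF node, and the imported specular and base color texture nodes yourself. The normal map chain follows the order described above (image texture, then mapping, then normal map).

```python
def wire_sim_material(node_tree, shader, specular_tex, base_color_tex,
                      normal_image, is_glass=False):
    """Apply the three node fixes to one material (hypothetical helper).

    node_tree      -- the material's node tree (material.node_tree in Blender)
    shader         -- the Principled BSDF node in that tree
    specular_tex   -- the image texture node the importer created for specular
    base_color_tex -- the image texture node feeding Base Color
    normal_image   -- an already-loaded image datablock for the normal map
    """
    nodes, links = node_tree.nodes, node_tree.links

    # Fix 1: specular texture alpha -> shader's Specular IOR Level input.
    links.new(specular_tex.outputs["Alpha"],
              shader.inputs["Specular IOR Level"])

    # Fix 2: image texture -> mapping (scale 2,2,2) -> normal map -> shader.
    normal_tex = nodes.new("ShaderNodeTexImage")
    normal_tex.image = normal_image
    mapping = nodes.new("ShaderNodeMapping")
    # The normal map texture is half the size of the other textures.
    mapping.inputs["Scale"].default_value = (2.0, 2.0, 2.0)
    normal_map = nodes.new("ShaderNodeNormalMap")
    links.new(normal_tex.outputs["Color"], mapping.inputs["Vector"])
    links.new(mapping.outputs["Vector"], normal_map.inputs["Color"])
    links.new(normal_map.outputs["Normal"], shader.inputs["Normal"])

    # Fix 3 (glass layers only): base color alpha -> shader alpha.
    if is_glass:
        links.new(base_color_tex.outputs["Alpha"], shader.inputs["Alpha"])

    return normal_tex, mapping, normal_map
```

Inside Blender you would pass in something like `material.node_tree` and the nodes found in `material.node_tree.nodes`; the exact node names an imported .dae ends up with are not something this sketch knows, so check them in the shader editor first.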

AND I figured out how to get all the nodes aligned and pretty!!! I'm ridiculously proud of that part.

Setting all that up by hand is tedious and time-consuming, so I wrote this script. I'm working on getting it to a point where you don't need to run it through the scripting tab for ease of use, but in its current state it is definitely workable! At the moment it only works with SimRipper exports with either single mesh & texture or solid and glass meshes and textures - it does NOT work with all separate meshes/1 texture. You do need to have some version of Blender 4 for it to work - 3.6 is not compatible.

If any brave soul is willing to give it a try, I have a working draft up here! Open it in your scripting tab, select the collection in the outliner you want the model to go to, and then run the script. Choose a .dae file that was exported either with 1 mesh/1 texture OR solid and glass meshes & textures, and you should be good to go! You should be able to move/pose/etc the model just like you would any other model imported from TS4SimRipper.

There's still some manual configuration required. I haven't figured out how to handle the emission map problem yet, and while I normally put subsurf modifiers on most of my models I haven't included that in the script yet, as that requires manually fixing an issue with a vertex on the inside of the head that causes ugly shadows on the neck/cheeks/hairline when you render with Cycles.

Please let me know if you have any questions or problems! I have tested this extensively on my computer, running Blender 4.3, but have not yet attempted it with any other computer.

18 notes


Text

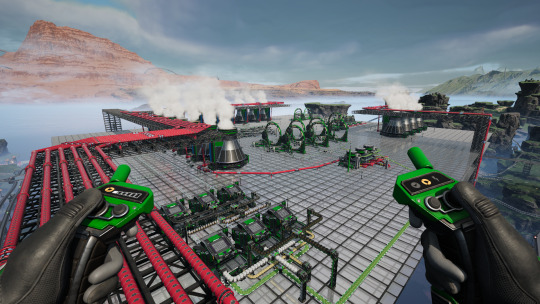

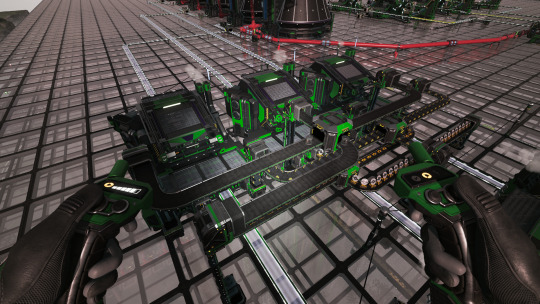

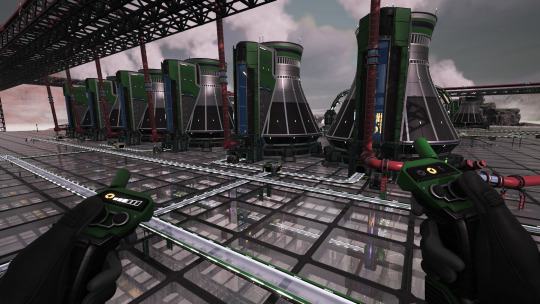

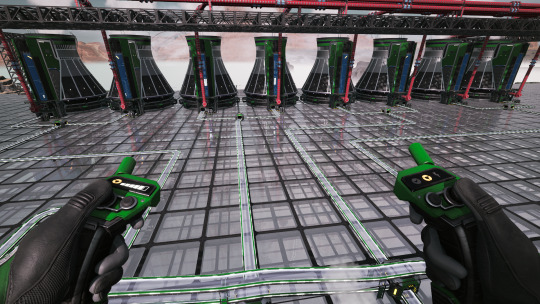

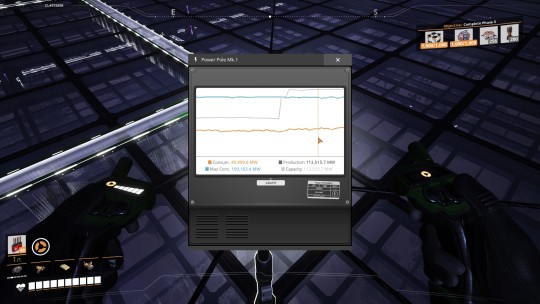

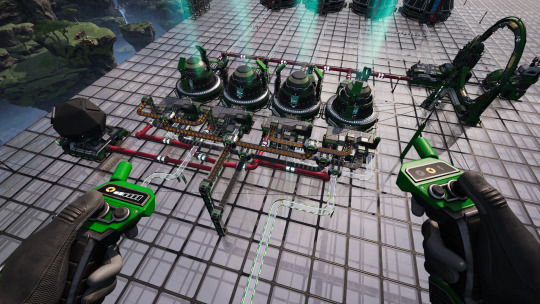

Satisfactory: The Full Ficsonium-Chain Nuclear Power Plant

Okay.

So.

I did it.

The thing I love doing most in Satisfactory is creating giant power plants that give me way more power than I need so I don't have to worry about my overhead while making other things. It's the thing that really hooked me and turned me into a loyal player.

In the 1.0 release, they added a way to fully engage your uranium resources and make plutonium power without unsinkable waste product, by adding a third step called Ficsonium.

And so the gauntlet was thrown down.

Starting out, making this all work looked impossible. But slowly, I whittled away at it, optimized it, until I had a workable plan. And now that I've built it, I'm going to subject you all to the write-up.

93.75 Uranium, 93.75 Sulfur, 56.25 Silica, and 281.25 Quickwire goes into 3 Manufacturers (overclocked to 125%) to make 75 Encased Uranium Cells (using the Infused Uranium Cell alternate recipe).

(All material numbers are in parts-per-minute, by the way, in case that confuses anybody.)

Those E.U. Cells go straight into 3 more Manufacturers (again, overclocked to 125%, and the Cell outputs/inputs are 1:1), along with 7.5 Electromagnetic Control Rods, 2.25 Crystal Oscillators, and 7.5 Rotors to make 2.25 Uranium Fuel Rods (using the Uranium Fuel Unit alternate recipe).
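For anyone who wants to check the math on those two steps, here is a small sketch of the scaling. The per-machine rates at 100% clock are back-derived from the totals above (totals divided by 3 machines × 125%), so treat them as an assumption rather than numbers read off the recipe screen; `scale_recipe` is just an illustrative helper name.

```python
def scale_recipe(per_machine, machines, clock):
    """Scale per-machine rates (parts per minute at 100%) to a whole stage."""
    factor = machines * clock
    return {item: rate * factor for item, rate in per_machine.items()}

# Assumed per-machine rates at 100% clock, back-derived from the post's totals.
INFUSED_URANIUM_CELL = {
    "Uranium": 25, "Sulfur": 25, "Silica": 15, "Quickwire": 75,
    "Encased Uranium Cell": 20,  # output
}
URANIUM_FUEL_UNIT = {
    "Encased Uranium Cell": 20, "Electromagnetic Control Rod": 2,
    "Crystal Oscillator": 0.6, "Rotor": 2,
    "Uranium Fuel Rod": 0.6,  # output
}

cell_stage = scale_recipe(INFUSED_URANIUM_CELL, machines=3, clock=1.25)
rod_stage = scale_recipe(URANIUM_FUEL_UNIT, machines=3, clock=1.25)

for name, stage in (("cells", cell_stage), ("fuel rods", rod_stage)):
    print(name, {item: round(rate, 2) for item, rate in stage.items()})
```

The cell output of the first stage and the cell input of the second both come out to 75 per minute, which is the 1:1 hand-off mentioned above.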

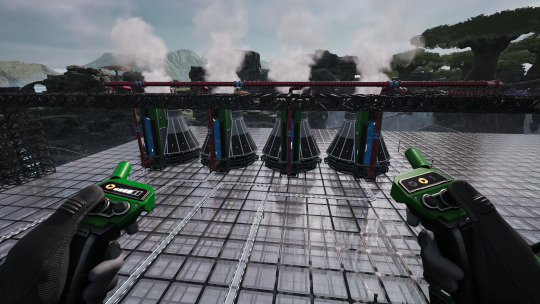

The Uranium Fuel Rods are fed into 6 Nuclear Power Plants (each overclocked to 187.5%, so the equivalent of 11.25 power plants). Each fuel rod Manufacturer splits its outputs to two of the power plants. The power plants are fed with 450 cubic meters of water each from the framework above (except for the nearest one, which is also taking water byproduct from the next stage). Together, the uranium power plants produce 28,125 MW of electrical power and 112.5 Uranium Waste.

The Uranium Waste is quickly conveyed beneath the floor over to three Blenders (overclocked to 150%), along with another 112.5 raw Uranium, 67.5 Nitric Acid, and 112.5 Sulfuric Acid, to make 450 Non-Fissile Uranium (using the Fertile Uranium alternate recipe). This also produces 180 water byproduct, which is fed back into the first uranium power plant.

Each Blender feeds into its own row of 2 Particle Accelerators, for a total of 6. (No overclocking here, for once!) The Non-Fissile Uranium is mixed with 60 total Aluminum Casings to produce 60 Encased Plutonium Cells (using the Instant Plutonium Cell alternate recipe).

The Encased Plutonium Cells are fed into 4 Manufacturers (overclocked to 200% - and we're back), along with 36 Steel Beams, 12 Electromagnetic Control Rods, and 20 Heat Sinks, to make a whole whopping 2 Plutonium Fuel Rods!

(Everything up to this point is considered "Stage One" of the overall nuclear power plant. At this juncture, I left the remaining power plants unconnected and simply destroyed the Plutonium Fuel Rods in the AWESOME Sink while I took a break and worked on other parts with the electrical power the uranium plants alone were giving me. Everything after this is considered "Stage Two," the even more complicated part.)

Once the rest of the chain was ready, I connected the Plutonium Fuel Rod Manufacturer outputs to 8 more nuclear power plants (overclocked to 250%, effectively 20 power plants!), 2 for each line. Each plant gets 600 water from overhead as well. This alone gives me another 50,000 MW of power!

...But it also produces 20 Plutonium Waste, which until 1.0 was completely indestructible and had to be stored away in a dedicated dump indefinitely.

(Sure, people have done the math. With enough space, you can buy yourself literal months of in-game uptime before your dump fills up with waste and the power plants become inoperable, in exchange for writing off that part of the map. But why give yourself any such hassle at all if you don't have to? Sustainability is a part of efficiency!)

The Plutonium Waste is brought over to 2 Particle Accelerators and combined with 20 Singularity Cells and 400 Dark Matter Residue (neither of which are cheap!) to make 20 Ficsonium.

The Ficsonium, 20 Electromagnetic Control Rods (again! so many!), 400 Ficsite Trigons (!!!), and 200 Excited Photonic Matter (dirt simple, actually - phew!) are fed into 4 Quantum Encoders to make 10 Ficsonium Fuel Rods. This also produces 200 Dark Matter Residue as a byproduct, which I put into a Particle Accelerator to make Dark Matter Crystals that I just toss into an AWESOME Sink.

(If you're smart, you could just loop the byproduct here back into the previous step and cut that input in half, but... I just could not be bothered this time. I wanted this plant fully operational as soon as possible.)
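The "cut that input in half" claim is simple steady-state arithmetic, sketched here with an illustrative helper name (`net_external_input` is not anything from the game):

```python
def net_external_input(required_per_min, recycled_per_min):
    """External supply still needed once a byproduct is looped back in."""
    return max(required_per_min - recycled_per_min, 0)

# Ficsonium step needs 400 Dark Matter Residue per minute;
# the fuel rod step gives back 200 per minute as a byproduct.
external_residue = net_external_input(400, 200)
print(external_residue)  # 200
```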

And finally, the Ficsonium Fuel Rods are sent directly from the Quantum Encoders to 4 Nuclear Power Plants (overclocked to 250% again, effectively 10 power plants) and mixed with 600 overhead water each to produce another 25,000 MW of power and absolutely zero waste or byproduct.

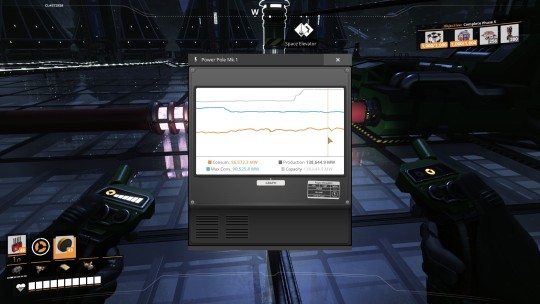

For a grand total of... 103,125 MW!

(And that's before adding bonus power from Alien Power Augmenters later!)
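The grand total can be double-checked by treating each overclocked plant as a fraction of "effective" plants. A sketch, assuming a base output of 2,500 MW per Nuclear Power Plant at 100% clock, which is what the numbers above imply (28,125 MW across 11.25 effective plants):

```python
BASE_PLANT_MW = 2500  # assumed base output per plant, implied by the write-up

# (fuel, number of plants, clock speed as a fraction)
STAGES = [
    ("Uranium", 6, 1.875),
    ("Plutonium", 8, 2.5),
    ("Ficsonium", 4, 2.5),
]

def stage_power(plants, clock, base_mw=BASE_PLANT_MW):
    """Power from one stage: effective plants times base output."""
    return plants * clock * base_mw

total_mw = 0
for fuel, plants, clock in STAGES:
    mw = stage_power(plants, clock)
    total_mw += mw
    print(f"{fuel}: {plants * clock:g} effective plants, {mw:,.0f} MW")

print(f"Total: {total_mw:,.0f} MW")  # Total: 103,125 MW
```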

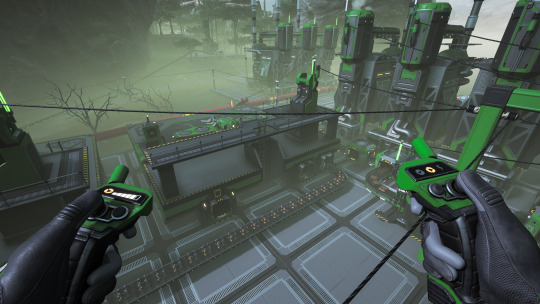

This was a massive build that took up the entire swamp region of the map (because to hell with the swamp, all my homies hate the swamp and its eldritch-moving alpha spiders). There's an interim layer of the platform for "spaghetti," or conveying the materials to where they need to go, and then just about everything needed for this process was made from scratch from within the swamp or close-by-ish.

With one exception: I drone'd in Pressure Conversion Cubes that I was making elsewhere for the Nuclear Pasta that's required for the Singularity Cells. Having to make Radio Control Units and Fused Modular Frames from scratch on top of everything else just would've tipped this over the breaking point for me personally.

And that's that. Probably the biggest power plant I'll ever want to make in Satisfactory, at least on my own in single-player. I've taken the gauntlet and thrown it back. And now I have more than enough power to finish the rest of the game.

Thanks for reading! I worked way too hard on this whole project.

(Also, for those who may be looking at this flow and thinking, "Holy crap, you'll need hundreds of Reanimated SAM and thus thousands of SAM!" - here's a pro tip: Somersloops. Overclock the Constructors making your Reanimated SAM to max and then throw in 1 Somersloop. Instantly improves the recipe from 4:1 to 2:1. I'm dead certain this was an intended part of SAM balancing, because holy crap there's just not enough of it on the map otherwise.)

#satisfactory#satisfactory game#satisfactory screenshot#nuclear power#nuclear energy#factory post-mortem write-up#please come look I worked so hard on this#shouting into the void

27 notes


Text

✧・゜: how i'm learning to trust my creative intuition :・゜✧:・゜✧

hey lovelies! ✨

i've been thinking a lot about creative intuition lately, that quiet inner voice that nudges you toward certain ideas or projects. for the longest time, i was absolutely terrible at listening to it. i'd get these little sparks of inspiration and immediately talk myself out of them. "that's been done before" or "you don't have the skills for that" or my personal favorite: "who do you think you are?"

sound familiar? thought so.

the thing is, i've slowly been learning that my intuition actually knows what it's talking about. those random ideas that pop into my head at 2am or while i'm in the shower? they're not random at all, they're my creative compass trying to guide me toward what truly lights me up.

⋆.ೃ࿔:・ recognizing intuition vs. fear ・:࿔ೃ.⋆

the first big challenge was learning to tell the difference between my intuition and my fear. they can sound weirdly similar sometimes!

my intuition tends to feel like excitement mixed with certainty, like "yes! this!" even when it makes no logical sense. it feels light and expansive, like opening a window in a stuffy room.

fear, on the other hand, feels heavy and contracted. it comes with a lot of "shoulds" and worrying about what other people will think. it's the voice that compares my chapter 1 to someone else's chapter 20.

i started keeping track of when these different voices would speak up, and slowly got better at recognizing which was which.

⋆.ೃ࿔:・ creating space to listen ・:࿔ೃ.⋆

intuition doesn't shout. it whispers. and in our noisy, constantly-connected world, those whispers can get completely drowned out.

i realized i needed to create actual space to hear myself think. for me, that looks like:

morning pages: three pages of stream-of-consciousness writing before looking at my phone

solo walks without podcasts or music (just me and my thoughts)

intentional boredom: staring out windows, lying on the floor, letting my mind wander

reducing input before trying to create output (no scrolling before creative sessions)

it's amazing what starts to bubble up when you're not constantly drowning it out with other people's voices and ideas.

⋆.ೃ࿔:・ the "stupid idea" notebook ・:࿔ೃ.⋆

one of the most helpful tools has been my "stupid idea" notebook, a judgment-free zone where i write down every creative impulse, no matter how ridiculous it seems.

the name is intentionally silly to remind myself not to take it all so seriously. some ideas truly are stupid, and that's perfectly fine! but some turn out to be the beginnings of something meaningful.

the rule is simple: write it all down, evaluate later. this creates a safe space for intuition to speak without immediately being shut down by my inner critic.

⋆.ೃ࿔:・ small intuition experiments ・:࿔ೃ.⋆

trusting your intuition is like building a muscle, you start small and work your way up.

i began with low-stakes creative decisions: which color to use in a drawing, which topic to write about in my journal, which route to take on my walk. when something felt intuitively "right," i'd go with it, even if i couldn't explain why.

gradually, i started trusting my intuition with bigger choices: which project to pursue, which opportunities to say yes to, which creative direction to explore.

with each small win, my confidence in my inner guidance grew stronger.

⋆.ೃ࿔:・ embracing the "wrong" turns ・:࿔ೃ.⋆

here's the thing about intuition: sometimes it leads you down paths that seem to go nowhere. i've followed creative impulses that resulted in projects i never finished or ideas that didn't work out.

but i'm learning that these aren't failures, they're necessary detours. every "wrong" turn teaches me something i needed to learn or leads me to connections i wouldn't have made otherwise.

intuition isn't finding the most direct path; it's finding YOUR path, with all its twists and surprises.

⋆.ೃ࿔:・ letting go of external validation ・:࿔ೃ.⋆

perhaps the hardest part of trusting my creative intuition has been detaching from external validation. when you follow your intuition, you might create things that don't immediately resonate with others or fit neatly into what's trending.

i'm still working on this one, honestly. but i've noticed that my most intuitive creations, the ones that felt most aligned with my inner voice, are ultimately the ones people connect with most deeply, even if the audience is smaller.

⋆.ೃ࿔:・ a gentle practice ・:࿔ೃ.⋆

trusting your creative intuition isn't a destination, it's an ongoing practice. some days i'm better at it than others. sometimes fear still wins. but each time i choose to listen to that quiet inner knowing, it gets a little louder, a little clearer.

if you're struggling to trust your own creative voice, start small. create tiny spaces of silence. write down the whispers. follow the sparks of excitement. and be patient with yourself when you forget.

xoxo, mindy 🤍

#creative intuition#creative process#creativity tips#creative journey#trusting yourself#creative inspiration#creative blocks#creative confidence#artistic journey#creative mindset#creative growth#creative voice#creative practice#creative development#artistic intuition#creative authenticity#creative self-trust#inner voice#creative guidance#intuitive creativity#creative expression#finding inspiration#creative flow#personal growth#self discovery#creative identity#artistic development#creative wisdom#creative struggles#overcoming creative blocks

48 notes


Text

I am finally getting a 3D printer again after 2 years (thank you again sweetheart), it's an EasyThreed K9. Full disclosure this is considered a cheap hobbyist toy but that's definitely good enough for my needs which at the moment are primarily prototyping enclosures quickly.

Also in getting news I got in another Lilygo board, the FabGL VGA32 which is made to work with FabGL:

This library plus the board makes it so we can create VGA/headphone jack output and use PS/2 mouse/keyboard input with an esp32 at the core. Which will form the basis of demoscene 3D audiovisual work I'll be doing with another sweetheart.

21 notes


Note

is a crankshaft inert?

sorry if this doesnt make sense but like you said the crankshaft moves the pistons n then the pistons keep the crankshaft moving so what starts the crankshaft moving to start with?

also why would an engine suddenly die if the pistons keep it moving?

Ok trying this again. Sorry I deleted the previous response on accident and then immediately had the desire to commit violence. That'll teach me for trying to answer a long post on mobile.

Anyway!

1. Yes.

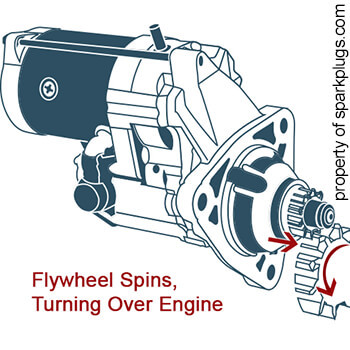

2. This is a starter motor and flywheel.

In modern cars (we're pretending for a minute that handcranks don't exist) when you turn the key in the ignition, the battery sends power to the starter motor. (The ignition coils do a separate job: they step the battery's low voltage up to the high voltage the sparkplugs need.) The starter motor (pictured above) engages the flywheel, which is connected to the crankshaft. The crankshaft then turns in time with the camshaft (connected by the timing belt), which opens the valves to let a vaporised mixture of air and petrol into the cylinder. As the crankshaft rotates, the belt makes the camshaft close its valves, the crankshaft moves the piston up, which creates compression in the engine, and then the sparkplug ignites the vapour and starts the engine. The piston is then forced downward by the explosion, rotating the crankshaft, and so the next piston repeats the same process according to firing order (which varies from engine to engine).

All the handcrank does is replace the job of the starter motor and the flywheel. Instead of electricity forcing the engine to turn over, the handcrank lets you turn the engine over manually on your own.

3. That depends. There's actually a few reasons an engine can die suddenly.

Loss of accelerant. "I know what's wrong with it! It ain't got no gas in it!" This can be anything from you ran out of petrol to your fuel lines being clogged. Remember folks—never buy petrol if the fuel trucks are refilling while you're there. When they reload the fuel into the tanks it stirs up sediment and if you fill your car you might get some of that sediment into your tank. If it gets sucked up it can clog your lines and kill your engine.

Loss of compression. This can be a blown head gasket (which is bad news and most likely to result in an all-around dead engine) or a blown piston. Blown pistons are worse as they have the capacity to damage everything else in the engine. You can also lose compression from cracks in the engine block itself, which is worst news cuz you can't fix that.

Overheating. Metal warps when it gets hot, and too much heat means the pistons don't seal the way they should (or might blow your gaskets). This results in loss of compression and a dead (or weak) engine. Sometimes it can make your engine explode.

Electrical issues. If your alternator has failed then your car battery will drain itself trying to provide power to the sparkplugs (which you need to combust the vapour in the engine). A COMPLETELY dead battery means no sparky to your sparkinators, which means no combustion and therefore the engine won't work. You can also get dead sparkplugs (they wear out) which means the vapour won't combust.

Loss of air. Engines need oxygen to vaporise the petrol, and fire needs oxygen to burn. Without air the engine will die. This is why off-roading vehicles often have snorkels—so the engine can still breathe even if it's submerged. This is also why the only way to stop a runaway diesel is by obstructing the air intake (usually by chucking a rag into the intake).

Retarded timing belt. As we went over earlier, the timing belt is what connects your crankshaft and camshaft. They're meant to move at a certain pace to each other. If that's interrupted in any way (the belt has worn and stretched with time and the teeth don't make contact the way they used to, or the belt has slipped off slightly) then the camshaft and crankshaft won't be aligned, and the fuel won't be released when it needs to be. If the misalignment is bad enough it can kill your engine entirely as the piston will be compressing nothing but air, so no explosion happens. Or, since the timing belt controls when the sparkplugs spark (through the distributor), it can mean that the spark happens when there's low compression in the engine, resulting in poor combustion and a weak (or even dead) engine.

Backup of fumes. Say your catalytic converter (or muffler or tailpipe) gets clogged somehow. Those fumes will back up to the engine, not allow the resident gases to escape, and kill your engine. Sometimes it'll make the engine or the backed up part explode, depending on your engine's horsepower (and how much air pressure it's putting out) and where exactly the blockage is.

I'll be here all day.

#blu whos#ford blu#tw r slur#r slur#I'm tagging that so people triggered by the word don't have to see it used in any context

16 notes
